Testing REST and Messaging with Spring Cloud Contract at DevSkiller

Here at DevSkiller, we have been working with Spring Cloud Contract for a long time now to simplify API design and testing while keeping the communication between our services working correctly. In fact, members of our team were among the creators, first users, and first contributors of the Accurest project, which was later adopted into Spring Cloud as Spring Cloud Contract. Over time, we have both adjusted the way we work with contracts to the specifics of our team and our system and leveraged the contracts to make substantial changes to our architecture. This article covers some of the things we have learnt while working with this tool and the improvements we were able to introduce to our system thanks to adopting the Consumer-Driven Contracts approach.

Working with internal APIs

A Local TDD Workflow

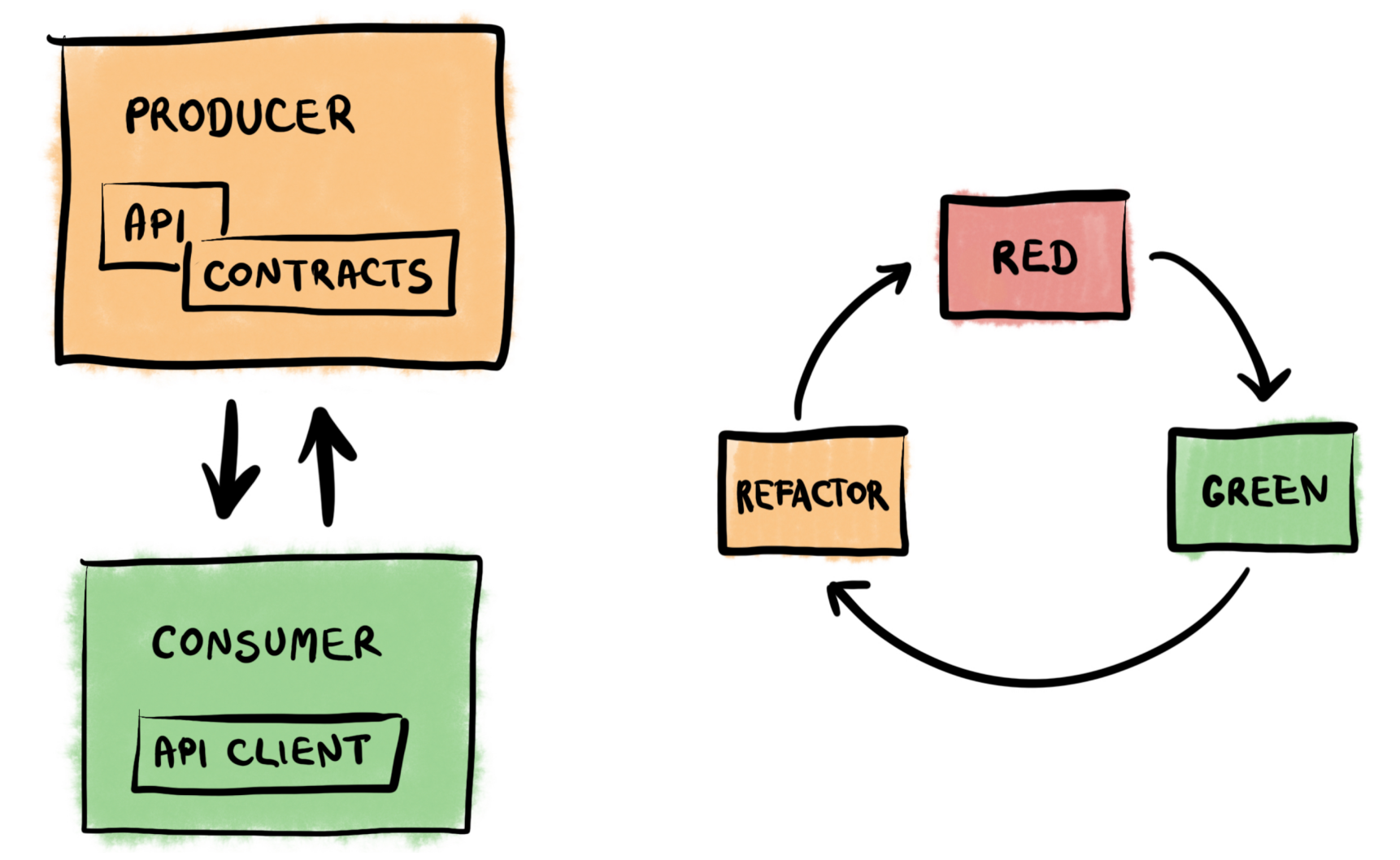

It is common for companies to build teams around microservices or to have each team own one or more of the applications the company develops. Alongside its many benefits, this approach tends to make engineers focus on a single context rather than on the functionality being implemented. We chose the “feature approach” instead: when working on a new functionality or a change to an existing one, an engineer introduces changes in all the microservices affected by the modification. On the rare occasions when this work is shared, one person will usually still work on both sides of the communication, i.e. the consumer-side client and the producer-side API, and leave stubbed service methods for someone else to implement.

Whoever is working on the API changes and their consumer will usually check out both repositories locally and start with the client: they add the contract changes to the producer repo, generate stubs with the producer tests skipped, and then test and improve the client against those stubs. Once a change has been implemented on this side, they can move on to the producer side: running the tests, adding the implementation, and then refactoring. They then proceed with similar iterations for further changes.

In practice, this allows us to apply the TDD red-green-refactor approach at the API and architecture level. Even though we always start changing the APIs on the consumer side, we can stay flexible and adjust the changes on the go; for example, when we notice, while changing the producer endpoints, that something seems overly entangled or unnecessarily complex. The only thing to keep in mind is that while skipping the producer tests when working locally can be quite useful, it should never, ever be done in deployment pipelines.

The Contracts’ Scope

Just as with E2E tests, which we only create for our most critical business scenarios, we don’t add contracts for every possible use case or corner case. Granted, we cover many more scenarios with contract tests than with E2E tests. However, if an endpoint serves a functionality that is used once every few months, does not bring in considerable revenue, and would not immediately cause difficulties for our clients if it broke, we might skip adding contracts for it.

Likewise, we don’t define contracts for all possible values. For instance, when we add a new value to an enum used in communication between services, rather than adding a new contract for it, we simply switch an existing contract to the new value. That’s because enum names rarely change, and adding a separate contract for every value would be overkill.

Backwards Compatibility Checks and Deferred Updates

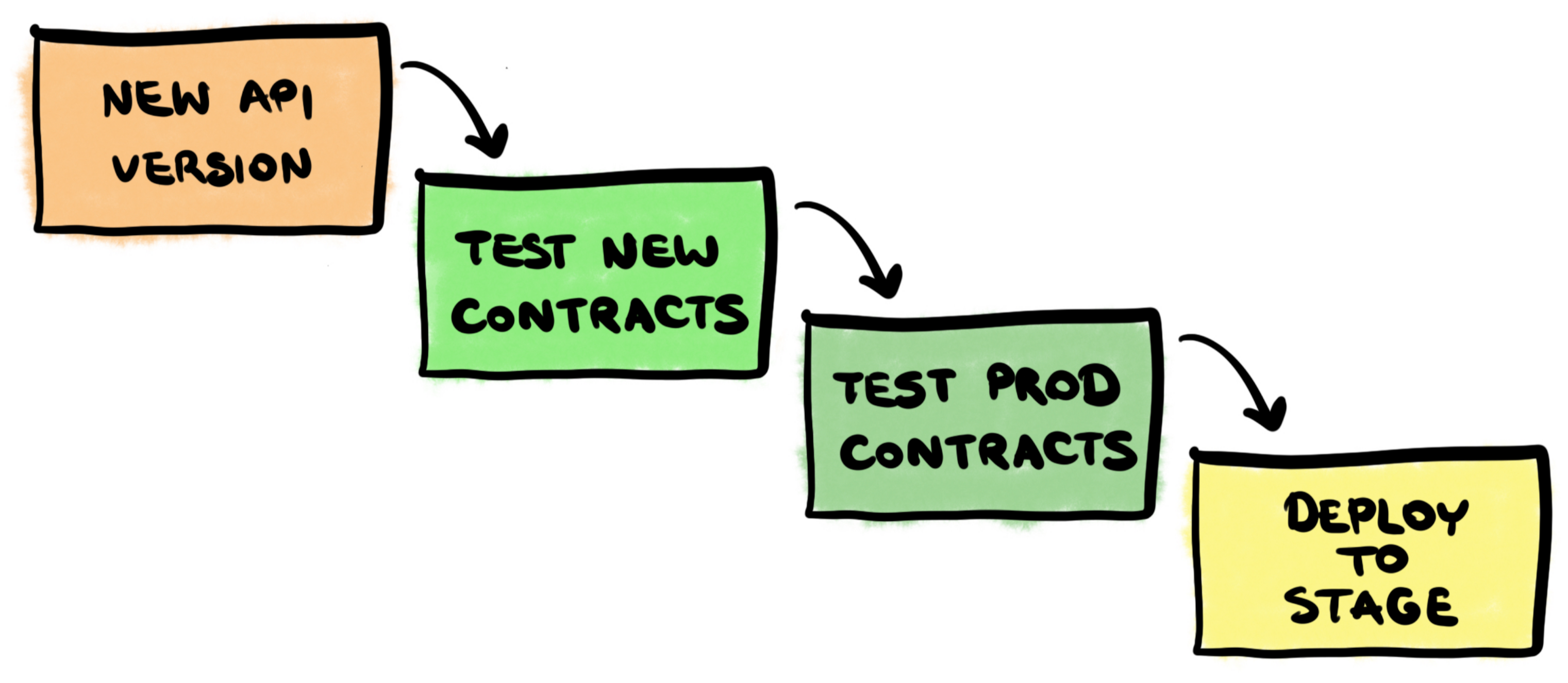

An important advantage of working with Spring Cloud Contract is that the contracts can be leveraged as an easy mechanism for API backwards compatibility checks. It’s fairly simple: before any deployment, we test the producer applications not only against their current contracts but also against the contracts currently deployed to production. This ensures that we are not breaking the API with backwards-incompatible changes.
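To make the idea concrete, here is a minimal, dependency-free Java sketch of the decision the pipeline makes. It assumes a drastically simplified model in which a contract is just a map of response fields to expected values; real Spring Cloud Contract contracts also describe requests, headers, and matchers, and the checks run as generated tests rather than through a helper like this. The class and method names (`CompatibilityCheck`, `satisfies`, `safeToDeploy`) are hypothetical.

```java
import java.util.List;
import java.util.Map;

/**
 * Hypothetical sketch: a "contract" is reduced to the response fields
 * (name -> expected value) a consumer relies on. Real Spring Cloud Contract
 * contracts are richer and are verified through generated tests.
 */
public class CompatibilityCheck {

    /** A response satisfies a contract if it contains every expected field with the expected value. */
    static boolean satisfies(Map<String, Object> response, Map<String, Object> contract) {
        return contract.entrySet().stream()
                .allMatch(e -> e.getValue().equals(response.get(e.getKey())));
    }

    /** The new producer version may be deployed only if it satisfies BOTH contract sets. */
    static boolean safeToDeploy(Map<String, Object> response,
                                List<Map<String, Object>> currentContracts,
                                List<Map<String, Object>> productionContracts) {
        return currentContracts.stream().allMatch(c -> satisfies(response, c))
                && productionContracts.stream().allMatch(c -> satisfies(response, c));
    }

    public static void main(String[] args) {
        Map<String, Object> newResponse = Map.of("buildTimeMillis", 5000);
        List<Map<String, Object>> current = List.of(Map.of("buildTimeMillis", 5000));
        List<Map<String, Object>> production = List.of(Map.of("buildTime", 5));
        // The new version passes its own contract but drops a field production consumers still use.
        System.out.println(safeToDeploy(newResponse, current, production)); // false
    }
}
```

Checking against both sets is what catches the case in `main`: testing only against the updated contracts would have let a breaking change through.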

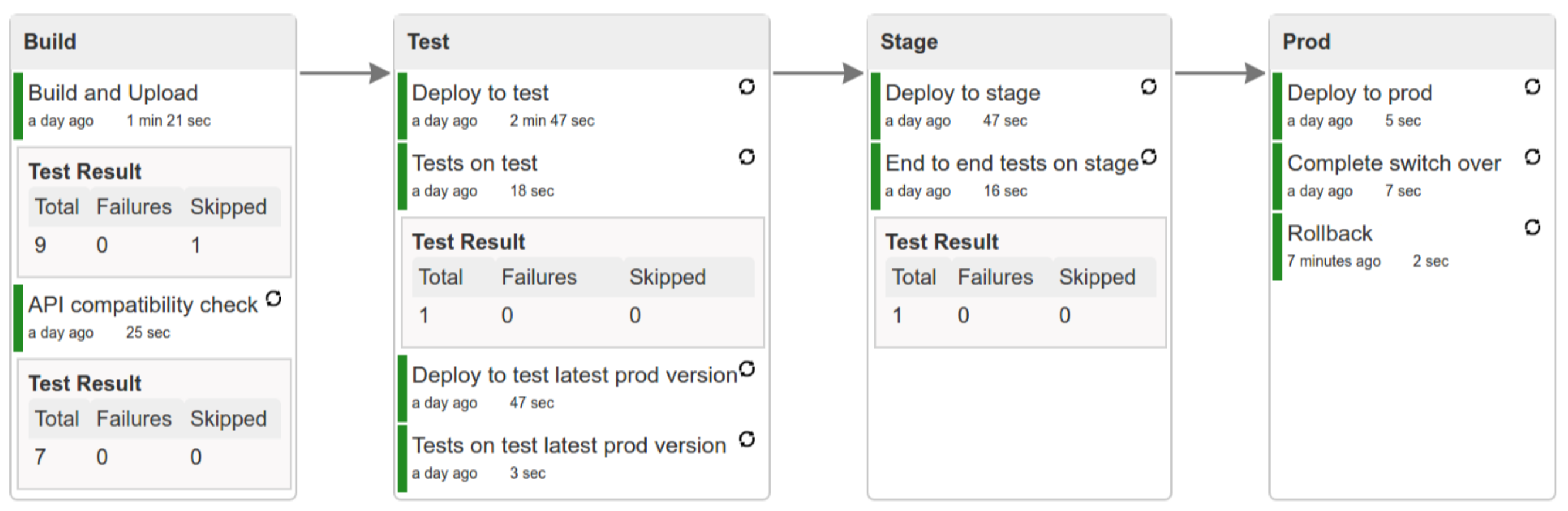

By the way, if you are interested in introducing this approach to your deployment pipelines, I suggest you have a look at the Spring Cloud Pipelines project that provides this functionality out of the box.

Source: https://spring.io/blog/2016/10/18/spring-cloud-pipelines

In Spring Cloud Pipelines, after the project is built and API compatibility is confirmed, the application is deployed (including database schema migrations) and tested. After that, a rollback test is executed by deploying the production version of the application and running tests against it. After deployment to stage, end-to-end tests can be triggered, followed by a zero-downtime rolling deployment to production. Since the backwards compatibility of both the API and the database setup has been verified, the pipeline also allows for an easy rollback to the application’s previous version if serious issues are found in the production code.

No Shared DTOs

In the beginning, to stick to the DRY principle, we widely used DTOs and small libraries with canonical objects shared across various services. It seemed clean and coherent at first, but we soon learnt that in a distributed system, avoiding coupling and ensuring that each microservice can be implemented and deployed autonomously matters much more than avoiding repetition.

Thanks to contract testing, collaborating services don’t need to use the same data structures to pass around and store data. In fact, as the services operate within different bounded contexts, it would probably be an anomaly if those structures had to be identical everywhere. We only need to know that the data is passed correctly between them. So after adopting the contracts, we stopped using shared DTOs altogether.
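The sketch below illustrates, with hypothetical types, what dropping shared DTOs can look like: the producer and the consumer each define their own classes, and the only thing they agree on is the payload shape that the contract pins down. A `Map` stands in for the JSON body so the example needs no serialization library; `BuildReport`, `BuildDuration`, and the `buildId` and `status` fields are assumptions for illustration.

```java
import java.util.Map;

/**
 * Hypothetical sketch: producer and consumer each own their types.
 * The only shared thing is the wire format (a Map standing in for JSON),
 * which in practice would be pinned down by a contract.
 */
public class NoSharedDtos {

    // Producer side: its own model, richer than any single consumer needs.
    record BuildReport(String buildId, String status, long buildTimeMillis) {
        Map<String, Object> toWire() {
            return Map.of("buildId", buildId, "status", status, "buildTimeMillis", buildTimeMillis);
        }
    }

    // Consumer side: a separate type in another bounded context.
    // It reads only the fields it uses and ignores everything else.
    record BuildDuration(String buildId, double seconds) {
        static BuildDuration fromWire(Map<String, Object> wire) {
            long millis = ((Number) wire.get("buildTimeMillis")).longValue();
            return new BuildDuration((String) wire.get("buildId"), millis / 1000.0);
        }
    }

    public static void main(String[] args) {
        Map<String, Object> payload = new BuildReport("b-42", "SUCCESS", 5000).toWire();
        BuildDuration d = BuildDuration.fromWire(payload);
        System.out.println(d.buildId() + " took " + d.seconds() + " s"); // b-42 took 5.0 s
    }
}
```

Because the consumer reads only the fields it needs (in the spirit of the Tolerant Reader pattern), the producer can add fields or reshape its internal model without forcing a coordinated release of a shared library.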

No Versioning

As we stopped sharing DTOs as dependencies and ensured that all our API changes were always backwards compatible, we realised we did not really need to version our services either. We can just work on the most recent version and, since we can always be sure it’s backwards compatible with the way our system currently works in production, deploy new versions at any time; other services will be able to work with the newest version of our service out of the box. For that reason, we removed service versioning as well.

Unlike with public APIs, where we would usually not make major changes without a version change, with internal APIs we might want to switch things up from time to time. So when testing backwards compatibility, we don’t want to ensure that modifications are never made; we just want to be sure that they won’t break production. So how do we, for instance, replace the buildTime field, which holds our build times in seconds, with a buildTimeMillis field holding the values in milliseconds?

We use a technique usually referred to as a deferred update. First, we add the buildTimeMillis field alongside the already existing buildTime field, both to the contracts and to the API producer application. We can now work with consumer instances that use either the old or the new field simultaneously. Second, we remove the old field from the contracts. Now, when engineers work on the API clients and run their tests against the producer stubs, the tests will fail, and they will see that they need to switch to the buildTimeMillis field in their applications to make the tests pass. They will not have to rely on someone remembering to tell them that they need to adjust their services to a change in the API. Finally, when all the services have been adjusted and their fresh versions deployed to production, we can remove the buildTime field from the producer application as well. The update is complete.

| Deployment | Contracts’ fields | Application fields |
|---|---|---|
| 0 | {"buildTime" : 5} | {"buildTime" : 5} |
| 1 | {"buildTime" : 5, "buildTimeMillis" : 5000} | {"buildTime" : 5, "buildTimeMillis" : 5000} |
| 2 | {"buildTimeMillis" : 5000} | {"buildTime" : 5, "buildTimeMillis" : 5000} |
| 3 | {"buildTimeMillis" : 5000} | {"buildTimeMillis" : 5000} |
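The rules behind the deployment table can be expressed as a small check, sketched here under simplifying assumptions: each deployment is reduced to the sets of field names in the contracts and in the producer’s responses, and a deployment is treated as safe if the producer exposes every field required by its own contracts and by the contracts of the previous deployment, which production consumers still rely on. It does not model waiting for consumers to be redeployed before the last step, and the names (`DeferredUpdate`, `Deployment`, `isSafeSequence`) are hypothetical.

```java
import java.util.List;
import java.util.Set;

/**
 * Hypothetical sketch of the deferred update, reduced to field names.
 * Each deployment must expose all fields named in (a) its own contracts
 * and (b) the previous deployment's contracts, still relied on in production.
 */
public class DeferredUpdate {

    record Deployment(Set<String> contractFields, Set<String> applicationFields) {}

    static boolean isSafeSequence(List<Deployment> deployments) {
        for (int i = 0; i < deployments.size(); i++) {
            Deployment current = deployments.get(i);
            // (a) the producer passes the tests generated from its own contracts
            if (!current.applicationFields().containsAll(current.contractFields())) {
                return false;
            }
            // (b) the producer still honours the contracts deployed to production
            if (i > 0 && !current.applicationFields()
                    .containsAll(deployments.get(i - 1).contractFields())) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        Deployment d0 = new Deployment(Set.of("buildTime"), Set.of("buildTime"));
        Deployment d1 = new Deployment(Set.of("buildTime", "buildTimeMillis"),
                Set.of("buildTime", "buildTimeMillis"));
        Deployment d2 = new Deployment(Set.of("buildTimeMillis"),
                Set.of("buildTime", "buildTimeMillis"));
        Deployment d3 = new Deployment(Set.of("buildTimeMillis"), Set.of("buildTimeMillis"));

        System.out.println(isSafeSequence(List.of(d0, d1, d2, d3))); // true
        // Jumping straight from deployment 0 to 3 drops buildTime while production still expects it.
        System.out.println(isSafeSequence(List.of(d0, d3)));         // false
    }
}
```

The second call shows why the intermediate deployments matter: removing buildTime in one go fails the check against the contracts still deployed to production.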

Working with public APIs

A good public API should return the data that was agreed upon and should have documentation that is easy to use and tightly linked to the code. We should have tests to ensure that the API is not modified by mistake, and we should be certain that our documentation reflects exactly what our code does. We have all faced situations where external API providers handed us human-written documentation and, after we had implemented API clients according to it, it turned out that there were discrepancies between the document and how the API really works. It’s so frustrating.

We definitely did not want the consumers of our APIs to ever face these kinds of problems with us, so we have used Spring Rest Docs to ensure that our API works correctly and is well documented. This neat solution lets you first write tests that define how your API should work and verify that the implementation is, indeed, correct, and then generate the API documentation automatically from those tests. The tests can be written with MockMvc, WebTestClient, or RestAssured, and additional methods are available to pass descriptions and other context information to be included in the documentation. Because the documentation is generated directly from the tests, we can be sure that our API works as expected and that the documentation reflects the available functionality.

Why am I writing about it in an article dedicated to contracts? One of the cool features here is that you can actually generate stubs and/or contracts from your Spring Rest Docs setup.

And why would you need stubs for your public API? Many of your public API consumers might not be implementing Spring Boot-based clients or writing integration tests with stubs; maybe they are just working on a frontend and using a completely different stack. The good news is that they can still use the stubs, for instance, to prototype their UI against them, with only minimal setup required on their side. All they need is a command line terminal and Maven installed, plus a few entries in their Maven settings.xml file, as described in the plugin documentation.

It takes no more than a few minutes, and then they can just run `mvn spring-cloud-contract:run -Dstubs="com.devskiller:sample-service"`; Stub Runner will be downloaded for them and launched with a WireMock instance running underneath, populated with the stubs that you have previously published to an artifact repository.

If you want to use Spring Cloud Contract with non-JVM technologies, you can also consider the Spring Cloud Contract Docker images, which allow you to work with consumer-driven contracts in the stack of your choice.