Avoid unconscious bias in your tech recruitment process

The goal of the tech recruitment process is fundamentally to find the best person for the job. The simple truth is that by eliminating unconscious bias in recruitment, you gain access to more qualified candidates than you could before.

I think you would be hard-pressed to find a person in tech who goes into the recruitment process consciously giving in to their biases. In the same way, I think you would be hard-pressed to find a hiker who goes into the mountains expecting to be saved by mountain rescue.

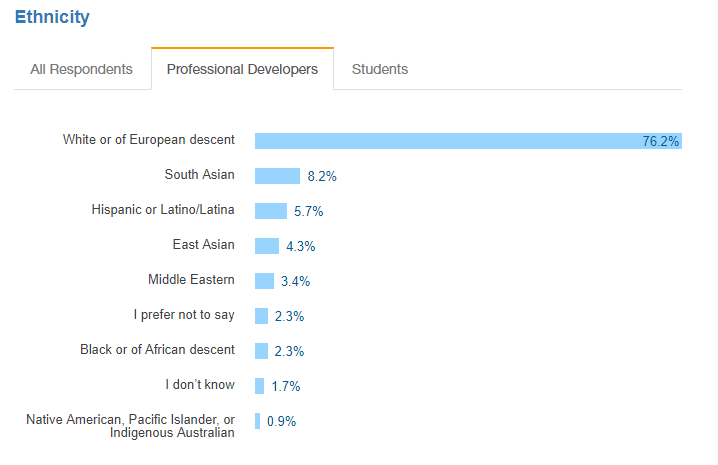

Unfortunately, hikers still get stranded and there is still evidence that the tech industry is rife with bias. The Stack Overflow 2017 Developer Survey found that their respondents who were professional developers were almost 90% male and almost 80% white.

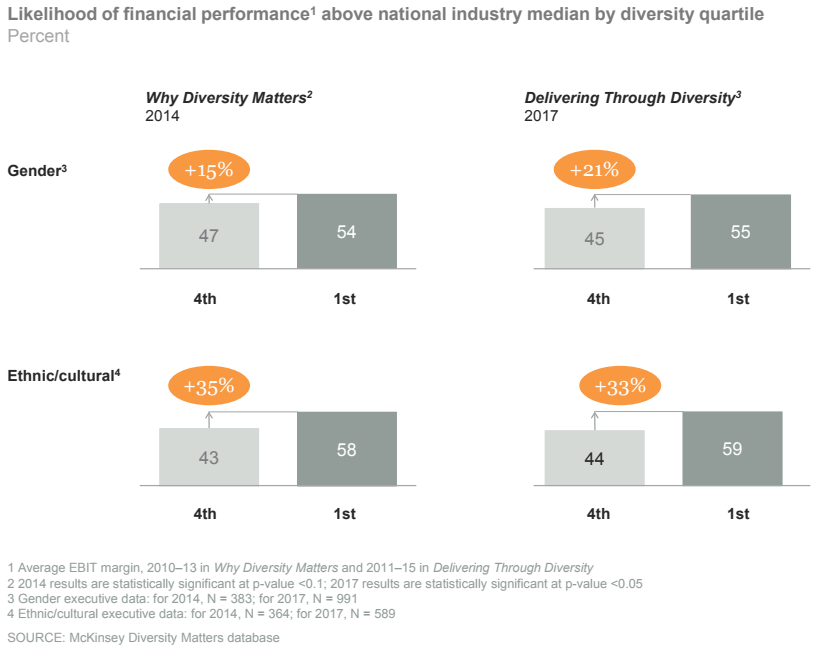

This is not ideal as more ethnically diverse companies outperform their non-diverse peers by 33% while gender-diverse companies outperform their peers by 21%. The first step towards seeing these benefits is to create as level a playing field as possible in tech hiring by eliminating every unconscious bias that can disadvantage candidates who aren’t white men.

Source: McKinsey

The purpose of this post is not to scold but to give tech recruiters the tools necessary to eliminate as many biases as possible in their recruiting process, helping them recruit better candidates.

Recognize the unconscious bias in your process

To be human is to carry unconscious cognitive biases. They are hardwired into our systems as a leftover from when we were hunting on the savanna, a time when not listening to your intuition could make you lion food. But the conditions we face in the modern world mean that we are best served looking beyond our immediate group.

This is similar to our ancient drive to eat anything sweet we can get our hands on. Since sugar is much more available now than it ever was for our ancestors, we’ve had to recognize that too much of it is unhealthy and have adjusted our thinking accordingly. But before we could, we had to take the first step and recognize we have a sweet tooth.

In the same way, by recognizing our unconscious biases, we can take steps to remove them from our decision-making process. Or, as Natasha Broomfield-Reid, Head of Development at Equal Approach, says:

By having self-awareness and identifying our own biases and how they affect our behaviour, we can begin to slow down our thinking and challenge our decisions.

Source: theundercoverrecruiter.com

These six cognitive biases are a good place to start. If we don’t recognize them, they can have an effect on the tech hiring process by distorting our perception of the candidates we recruit.

Confirmation Bias

This is when we look for ways of making an initial judgment come true rather than seeking data objectively.

A common way this affects the tech hiring process is when an interviewer tailors their questions to each candidate to confirm the interviewer’s preconceived notions, according to Ji-A Min, head data scientist at Ideal.

For instance, an older candidate might be asked whether they sometimes spend 12 hours straight coding, a question that may not be put to a younger candidate. This is because the interviewer feels that an older candidate might be less energetic, while the younger candidate is never asked about their energy level.

Effective Heuristic

This is a fancy way of saying that an interviewer judges a person’s suitability based on superficial factors, as explained by Wepow. This can manifest itself in the assumption that an overweight candidate may not perform as well as a more slender candidate, even though there is no connection between a person’s weight and their ability to code.

Expectation Anchor

Another way of saying this is unconscious favoritism. It occurs when for whatever reason an interviewer likes a certain candidate. Many companies have a multi-step hiring process which can be affected if an interviewer takes a shine to the candidate in the first round.

The subsequent rounds of the interview are supposed to provide more information about the candidates but the favored candidate may not receive the same scrutiny as the other candidates. Not only that, the other candidates end up being judged against the first candidate rather than on their own merits.

Similarity Attraction Effect

Ji-A Min describes this as the fact that we tend to seek others like ourselves. Now that might make sense if you are trying to attract people with similar values to yourself but with the tech industry being overwhelmingly white and male, it can end up excluding capable candidates who do not look like the interviewer.

It has gotten so bad in the tech industry that Vivian Giang says, “the percentage of underrepresented minorities [in Silicon Valley] is so low, employers shouldn’t trust their own judgment anymore.”

Gender Bias

This is a hot issue right now in tech for good reason. Women are being perceived differently than men despite routinely proving their capabilities.

Intuition

In other words, trusting your gut. Intuition may seem like a sign of wisdom, and it is celebrated in the tech world. After all, visionaries like Steve Jobs are admired for insisting, according to the New York Times, that “Intuition is a very powerful thing, more powerful than intellect.” So why shouldn’t this be a part of your hiring process?

Because it simply heightens your unconscious bias. By shunning an objective framework for evaluating candidates, interviewers become more susceptible to their own biases. This is borne out in research from Hays which found that the more recruitment experience a recruiter has, the more bias they exhibit.

Will recognizing your biases fix the process on its own? No, but it’s a good first step. As a Deloitte Human Resources Director said:

We all thought we were incredibly fair and then we tested our data against gender, age and full-time vs part-time. Simply instituting a process to test the data has been enough to get people to stop, think and test their decision making. It has been a really cathartic process for people. We didn’t realize we could be making biased decisions.

So once you are aware of the biases that you carry with you, it is important to mitigate their effects and it all starts at the beginning of the tech recruitment process.

Make the language of your job ads gender neutral

Most jobs outside of wet nurse are not naturally gendered and do not carry gender-specific qualifications. In tech, there is nothing that is done better by one gender or the other. It may surprise you, then, to find out that the language of your job ads can signal a gender preference.

We have already talked elsewhere on this blog about the importance of removing gendered pronouns from your ads while not describing a frat house atmosphere that is meant to appeal to young men over women with families.

But beyond that, a study conducted by the University of Waterloo and Duke University found that masculine words (like leader, competitive, and dominant) showed up in job advertisements for male-dominated fields like computer programming much more often than in job advertisements for female-dominated fields.

What this means is dropping “coding ninja” from your job ads and using “proficient coder” instead. According to Carmen Nobel at the Harvard Business Review, a common reason these adjectives come up is baroque job descriptions that demand a ton of adjectives. In addition to removing these adjectives from your ad, keep your job descriptions to only the necessary information to avoid needing to stuff them with gendered descriptions.
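If you want a quick first pass over your own ads, a small script can flag masculine-coded words before a human reviews the copy. This is only a minimal sketch: the word list below is illustrative (the first three terms come from the study mentioned above, while "ninja" and "rockstar" are assumed additions), and the function name is hypothetical.

```python
import re

# Illustrative list only. "leader", "competitive" and "dominant" come from
# the study cited in the text; "ninja" and "rockstar" are assumed additions.
MASCULINE_CODED = {"leader", "competitive", "dominant", "ninja", "rockstar"}


def flag_gendered_words(ad_text, word_list=MASCULINE_CODED):
    """Return the sorted masculine-coded words that appear in a job ad."""
    # Lowercase the ad and split it into plain alphabetic words.
    words = re.findall(r"[a-z]+", ad_text.lower())
    return sorted(set(words) & set(word_list))


ad = "We need a competitive coding ninja who is a natural leader."
print(flag_gendered_words(ad))  # ['competitive', 'leader', 'ninja']
```

A match is a prompt to reread the sentence, not proof that the ad is biased, so treat the output as a checklist for the person writing the ad.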

Do anonymized coding tests before the interview

It is neither wise nor usually possible to avoid an in-person interview before making a hire, but I think it does level the playing field if you do as much evaluation as you can before that in-person interview.

William Gadea, IdeaRocket

Source: Recruiter.com

No matter how objective we try to be, there will always be a point in the recruitment process when we have to meet somebody face to face. This is a prime opportunity for all of our cognitive biases to take hold if we aren’t careful.

There is ample evidence to suggest that in the US, Canada, and France, a candidate with otherwise equal qualifications gets called back up to 50% more often for initial interviews if they have a “white” sounding name (or, in the case of France, a non-foreign-sounding one). This effect is also felt when it comes to male and female names, with “male” CVs seen as being significantly more competent and hireable than “female” CVs.

One of the best ways to mitigate this effect is to gather as much anonymous data as possible before inviting candidates for interviews. Rather than trying to judge somebody’s competencies based on a CV with extraneous cultural and gender information, give your prospects an objective and anonymous test.

For technical hires, the most important thing to find out is whether they can actually produce powerful, clean, efficient solutions to your business problems within a deadline. The best way to do this is with a first-day-of-work test with anonymized results reports that can be sent to recruiters or developers, like the ones offered by DevSkiller.

Source: DevSkiller

According to Laszlo Bock, former Senior Vice President of People Operations at Google, work sample tests provide the best possible indication of future performance. It is important to give your devs a programming task which mirrors what they will have on their first day of work, using all of the tools and resources they would normally use.

This is the best objective indication of their ability to do the job. You can have each one of your dev candidates take one of these tests. Only invite ones that you know can do the job to the interview process. That way, when you finally meet them face to face, there is no doubt that they can do the job, limiting your reliance on intuition or other biases.
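If your testing tool does not anonymize results for you, the idea can be sketched in a few lines: strip the identifying fields from each result, replace them with a random ID, and keep the lookup table away from whoever reviews the scores. The field names and the `anonymize` function below are hypothetical, chosen only for illustration; a real platform will have its own schema.

```python
import uuid

# Hypothetical field names; adjust to whatever your own data contains.
IDENTIFYING_FIELDS = {"name", "email", "photo_url", "age", "gender"}


def anonymize(results):
    """Split raw test results into anonymized reports and a private lookup."""
    reports, lookup = [], {}
    for row in results:
        candidate_id = uuid.uuid4().hex  # random ID, unrelated to the person
        # Identifying details go into the lookup, which reviewers never see.
        lookup[candidate_id] = {
            k: v for k, v in row.items() if k in IDENTIFYING_FIELDS
        }
        # Everything else (scores, timings) goes into the report.
        report = {k: v for k, v in row.items() if k not in IDENTIFYING_FIELDS}
        report["candidate_id"] = candidate_id
        reports.append(report)
    return reports, lookup


raw = [{"name": "Sam Example", "email": "sam@example.com", "score": 87}]
reports, lookup = anonymize(raw)
print(sorted(reports[0]))  # ['candidate_id', 'score']
```

Only after the shortlist is fixed would someone use the lookup to match IDs back to people and send out interview invitations.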

Standardize the interview process

Consistency is key in removing bias from hiring processes.

Robin Schwartz, MFG Jobs

Source: recruiter.com

You should only be interviewing devs you know are technically competent. The interview process should be used exclusively to figure out motivation and cultural fit. Of course, these are subjective ideas and there is a real danger of the interviewer’s biases creeping in. Probably the best thing you can do is to standardize the interview process.

Lilly Zhang, a career development specialist at MIT, explains why and how you should do this. The biggest reason is probably confirmation bias. As we explained before, when you give a unique interview to each candidate, you start looking for different things from each. As Ji-A Min put it, your data doesn’t match and you end up comparing apples to oranges. By giving everybody the same interview, you can compare the results on equal terms.

Take good notes

It is tempting to size up a candidate in the first few moments of meeting them, but this has the effect of setting an expectation anchor. By writing notes, you can refer to exactly what the candidate said rather than relying on your overall impression of them. That way, your impression of them is based on everything they said, not just what they looked like when they walked through the door.

Use a Rubric

Decide what criteria you are going to use and stick to them for every candidate. To do this, you will need a standard list of questions. This way, you can be assured that you get the same information from each person and that you grade them on the same scale rather than creating an expectation anchor.

Even if something subjective like likability is important for your hiring process, assign a numeric value to it. That way, you aren’t relying on vague impressions but numbers that you can compare between the candidates.
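To make this concrete, here is a minimal sketch of a weighted rubric. The criteria, the weights, and the 1 to 5 scale are all assumptions for illustration; what matters is that they are fixed before the first interview and applied to every candidate.

```python
# Hypothetical criteria and weights; decide on your own before interviewing.
RUBRIC = {"motivation": 0.4, "communication": 0.3, "likability": 0.3}


def rubric_score(scores, rubric=RUBRIC):
    """Combine per-criterion scores (assumed 1-5) into one weighted number."""
    # Refuse to score a candidate who was not rated on every criterion,
    # so nobody is compared on partial information.
    missing = set(rubric) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(rubric[c] * scores[c] for c in rubric), 2)


candidate = {"motivation": 4, "communication": 5, "likability": 3}
print(rubric_score(candidate))  # 4.0
```

Because every candidate is scored on the same criteria with the same weights, the resulting numbers can be compared directly.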

Justify your decision

The reason why you have notes on a standard set of questions is that you should be able to defend every decision you make with the evidence you’ve collected. Since you’ve looked for the same piece of information from each dev candidate, you can justify why the answer one dev gave is better than the answer from another dev.

Do away with elite fast tracks

Elite fast tracks, which excuse certain preferred candidates from the initial screening stages and take them directly to the last interviews, are just breeding grounds for expectation anchors and similarity bias. They have created a situation where only strangers have to go through a coding test while friends of the current team get fast-tracked.

This means that the burden of proving yourself is often put on minorities while their white counterparts are fast-tracked. After all, 76.2% of the professional developers who responded to the 2017 Stack Overflow Developer Survey were white, while in the US ¾ of white people don’t have any non-white friends. This means that, proportionally, the majority of the recipients of elite fast tracks in tech are going to be white.

Source: Stack Overflow

Especially in tech, everyone should go through a technical screen. If the preferred candidate is really as good as you think, they should have no problem proving that they are technically competent.

Assign a diverse group to interview the candidates

Despite your best efforts, you may just have a hunch about a candidate, a trap for those who rely on intuition. To avoid this coloring the hiring decision, have a diverse group take part in the interview. Each member will come away with their own impressions, preventing one person’s biases from coloring the group’s view of the candidate.
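One simple way to combine a panel's views is to have each interviewer score the candidate independently and then look at both the average and the disagreement. The sketch below assumes each interviewer has already produced a single score on a 1 to 5 scale; the function name, the interviewer labels, and the `max_spread` threshold are hypothetical.

```python
from statistics import mean


def panel_summary(scores_by_interviewer, max_spread=2):
    """Average a panel's scores for one candidate and flag wide disagreement."""
    values = list(scores_by_interviewer.values())
    spread = max(values) - min(values)
    return {
        "mean": round(mean(values), 2),
        "spread": spread,
        # A wide gap suggests one person's hunch may be driving their score,
        # so the panel should talk it through using their notes.
        "discuss": spread >= max_spread,
    }


print(panel_summary({"interviewer_a": 4, "interviewer_b": 4, "interviewer_c": 2}))
# {'mean': 3.33, 'spread': 2, 'discuss': True}
```

Collecting the scores before the panel talks to each other keeps one strong opinion from anchoring everyone else.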

Practices to avoid

The best way to eliminate unconscious bias is to focus only on the information essential to choosing a dev while removing as many other factors as possible from the process. There is such a thing as too much information. Here are some practices to avoid, as they distort the data you use for your hiring decision.

Pressure unrelated to the job

Your technical screen should test how your dev does under real-world conditions. In the real world, they would normally have an idea of what kind of task they would need to do. They would also be able to use tools they were already familiar with to do the job. Asking them to do a random task on an unfamiliar platform adds stresses that have nothing to do with their skill.

Give them some prep to explain what the test will be like and let them use their own IDE. This will give you the purest data about their abilities.

Academic credentials

These and other indicators are less relevant in tech than the ultimate technical screen. It may sound great to say you source your candidates from elite institutions but as David Lopes from Badger Maps says,

Most people aren’t fortunate enough to go to Stanford or University of California, Berkeley, but they are just as ready, qualified, and eager as their counterparts.

Source: Recruiter.com

Your unconscious bias doesn’t own you

Even the most even-handed person has cognitive biases that they need to account for. This does not make you bad, it simply makes you human. There is a big diversity problem in tech right now so learning how to account for your biases and eliminate them from the hiring process will get you close to hiring the best candidate every time.